Evaluating our DNALC Inside Cancer website

Every multimedia developer is, from time to time, faced with a difficult question from a board member, critic, or funding body: “This program is all very nice, but can you prove it actually helps students learn?”

This year, we found the answer.

As part of my job as a producer at the DNA Learning Center, I evaluate our suite of resources, including websites, teacher training workshops, and apps. We recently completed the evaluation of our cancer biology website, Inside Cancer, which included conducting experiments in 2010–11 to test whether the site improves student learning in genetics and cancer biology.

There is increasing pressure on us to go beyond anecdotal reports of teacher satisfaction with new resources to sophisticated studies of how those resources affect students in the classroom. This is a big task, considering that website developers seldom have direct access to students to assess a program's impact or effectiveness. Evaluation studies require considerable effort to recruit teachers and to ensure that they generate matched sets of comparable student data. Learning takes place in complex environments with many interacting institutional and personal variables, and controlling for these is daunting (1).

In our study, five high school and college teachers from five states were selected from attendees of Inside Cancer teacher training workshops. These teachers used Inside Cancer to teach 297 students in 13 classes at three high schools (two public schools and one all-girls Catholic school), a 2-year college, and a 4-year college. The institutions were in city, suburban, and urban locations, ranged in size from 250 to 10,000 students, and had an average class size of 24 students. High school classes in the study were biology (n=94), health (n=37), and biotechnology (n=60). College classes were environmental science, nutrition, and life science (combined n=57) and online biology (n=49). Topics taught included the hallmarks of cancer, the effects of smoking, cell signaling, mutations, and oncogenesis. Teachers used Inside Cancer either in class or as a resource for completing an assignment and quiz. The median age of student participants was 18.4 years, and 71.0% were female.

To control for differences between teachers and students, we used a crossover, repeated-measures design in which each student served as both an experimental and a control subject (2). For the first topic, the A classes used a DNALC website for classwork, while the B classes used lectures, textbooks, or other websites (Figure 1). The classes then switched conditions for the second topic, so that each student learned one topic with a DNALC website and one topic with another resource. Students completed a quiz after each topic, which allowed us to compare how well each student learned with and without a DNALC website. This design also allowed us to examine how Inside Cancer was used by different cohorts of students in different settings (3).
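To make the crossover logic concrete, here is a minimal Python sketch, assuming a simple two-period AB/BA layout with one quiz score per student per topic. The function names and labels are hypothetical illustrations, not the study's actual analysis code; the point is that every student, whichever order their class used, yields one within-student difference (Inside Cancer score minus other-resource score).

```python
# Hypothetical labels; in the study the actual topics differed from class to class.
INSIDE_CANCER = "Inside Cancer"
OTHER = "Other resource(s)"


def crossover_schedule(group: str) -> list[tuple[str, str]]:
    """Return the (topic, resource) sequence for an A or B class in an AB/BA crossover."""
    if group == "A":
        return [("Topic 1", INSIDE_CANCER), ("Topic 2", OTHER)]
    if group == "B":
        return [("Topic 1", OTHER), ("Topic 2", INSIDE_CANCER)]
    raise ValueError(f"unknown group: {group!r}")


def paired_difference(quiz1: float, quiz2: float, group: str) -> float:
    """One student's Inside Cancer quiz score minus their other-resource quiz score."""
    (_, first), (_, second) = crossover_schedule(group)
    # Map each quiz score to the resource the student used for that topic.
    scores = {first: quiz1, second: quiz2}
    return scores[INSIDE_CANCER] - scores[OTHER]


# Usage with made-up scores: each student contributes one within-student difference.
print(paired_difference(80.0, 70.0, "A"))  # A student, Inside Cancer first; prints 10.0
print(paired_difference(70.0, 90.0, "B"))  # B student, Inside Cancer second; prints 20.0
```

Because each student acts as their own control, stable differences between students or teachers cancel out of these differences.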

Figure 1. Crossed, repeated-measures study design for evaluation of InsideCancer.org.

| | Topic 1 | Quiz | Topic 2 | Quiz |
|---|---|---|---|---|
| A classes (n=148) | Inside Cancer | Quiz 1 | Other resource(s)* | Quiz 2 |
| B classes (n=149) | Other resource(s)* | Quiz 1 | Inside Cancer | Quiz 2 |

* Included PowerPoint lectures and other websites, such as those of the NIH.

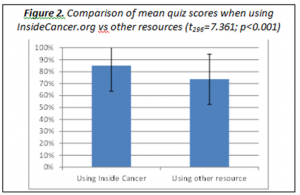

Strikingly, students’ quiz scores were significantly higher when using Inside Cancer: 85.0% ± 20.8 versus 73.8% ± 21.3; t(296) = 7.361, P < 0.001 (Figure 2).
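The reported degrees of freedom, 296 (that is, 297 students minus 1), are consistent with a paired t-test on each student's two quiz scores. Assuming that analysis, the statistic can be sketched in Python as below. The function name and the four students' scores are hypothetical illustrations, not the study's data or code.

```python
import math
from statistics import mean, stdev


def paired_t(with_ic: list[float], without_ic: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom from per-student score pairs."""
    if len(with_ic) != len(without_ic) or len(with_ic) < 2:
        raise ValueError("need two equal-length score lists covering at least 2 students")
    diffs = [a - b for a, b in zip(with_ic, without_ic)]
    n = len(diffs)
    # Standard error of the mean difference: sample SD of the differences / sqrt(n).
    se = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / se, n - 1


# Toy scores for four hypothetical students (not study data).
t, df = paired_t([90, 80, 85, 70], [80, 75, 70, 65])
print(f"t({df}) = {t:.3f}")  # prints t(3) = 3.656
```

With real data, `scipy.stats.ttest_rel(with_ic, without_ic)` computes the same paired statistic and also returns the two-sided P value.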

Thus, we now have a practical and supportable answer to that difficult question: an engaging website can potentially improve student performance by about one letter grade (in this study, an 11.2-percentage-point difference in mean quiz scores).

So go check out Inside Cancer – it may well help you increase your grades!

This blog post is based on an excerpt from a paper that appears in the December 23 issue of the journal Science.

1) L. Cohen, L. Manion, K. Morrison, Research Methods in Education (Routledge, Oxford, ed. 6, 2007).

2) B. Jones, M. Kenward, Design and Analysis of Cross-Over Trials (Chapman and Hall, London, 2003).

3) J. Papay, Different tests, different answers: The stability of teacher value-added estimates across outcome measures, American Educational Research Journal 48, 163–193 (2011).


This entry was posted by Amy Nisselle on December 22, 2011 at 12:46 pm, and is filed under Inside Cancer.